The question is: can we tell “Boy” from “Alien”?

Face recognition addresses the “who is this?” question. It is a 1:K matching problem: given a database of K faces, we have to identify whose face appears in the given input image.

Facenet is a TensorFlow implementation of the face recognizer described in the paper “FaceNet: A Unified Embedding for Face Recognition and Clustering”.

FaceNet learns a neural network that encodes a face image into a vector of 128 numbers. By comparing two such vectors, we can then determine whether two pictures show the same identity. FaceNet is trained by minimizing the triplet loss. For more information on triplet loss, see https://machinelearning.wtf/terms/triplet-loss/
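As a rough illustration of the triplet loss, here is a minimal NumPy sketch. The function name `triplet_loss`, the batch layout, and the default margin `alpha=0.2` are my assumptions for this example; it is not the training code behind the pre-trained weights used in this post.

```python
import numpy as np

def triplet_loss(anchor, positive, negative, alpha=0.2):
    """Triplet loss over a batch of embeddings, each of shape (batch, dim).

    anchor and positive are encodings of the same identity,
    negative is an encoding of a different identity.
    alpha is the margin (an assumed default for this sketch).
    """
    # Squared L2 distance between anchor and positive, one value per triplet
    pos_dist = np.sum((anchor - positive) ** 2, axis=-1)
    # Squared L2 distance between anchor and negative, one value per triplet
    neg_dist = np.sum((anchor - negative) ** 2, axis=-1)
    # A triplet contributes only if the negative is not at least alpha farther away
    return float(np.sum(np.maximum(pos_dist - neg_dist + alpha, 0.0)))
```

The loss is zero once every negative is at least `alpha` farther from the anchor than the matching positive, which is what pushes encodings of the same identity together and different identities apart.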

Since training requires a lot of data and a lot of computation, I haven’t trained it from scratch here.

I used a pre-trained model instead. The Inception network implementation and weights come from the 4th deeplearning.ai course, “Convolutional Neural Networks”, on Coursera.

The network architecture follows the Inception model from [Szegedy *et al.](https://arxiv.org/abs/1409.4842).

More details about Inception v1 are in this blog post: https://www.analyticsvidhya.com/blog/2018/10/understanding-inception-network-from-scratch/

This network takes 96×96 RGB images as its input. It encodes each input face image into a 128-dimensional vector.

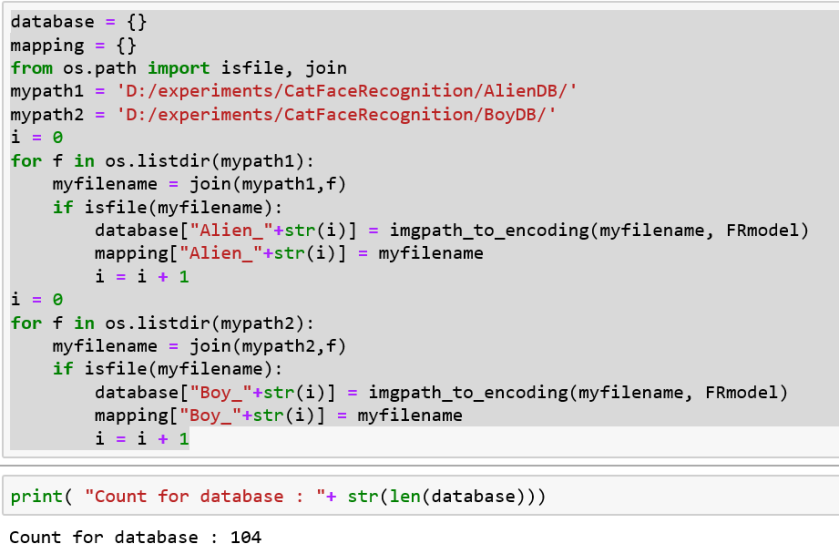

First, I converted each image of “Alien” and “Boy” (52 images of each) into an encoding and stored it in a database.

Here is the code that does that:

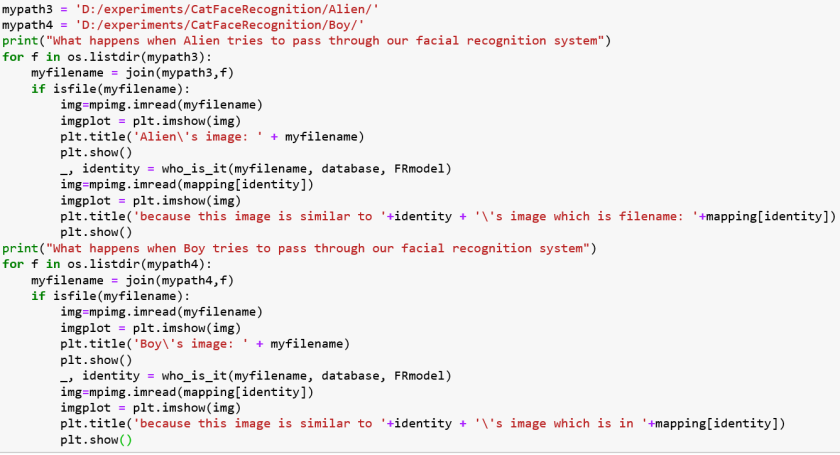
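In text form, the idea looks roughly like the sketch below. `build_database` and the `encode` callable are hypothetical names standing in for whatever maps an image path to its 128-dimensional encoding; this is not the exact code from the screenshot.

```python
import numpy as np

def build_database(image_paths_by_name, encode):
    """Return a list of (name, encoding) pairs, one per image.

    image_paths_by_name: dict such as {"Alien": [...paths...], "Boy": [...paths...]}
    encode: hypothetical callable that maps an image path to a 1-D encoding
    """
    database = []
    for name, paths in image_paths_by_name.items():
        for path in paths:
            # Store every encoding separately so each image can be matched on its own
            database.append((name, np.asarray(encode(path), dtype=np.float64)))
    return database
```

Keeping one entry per image, rather than one averaged encoding per name, makes it possible to report which stored image was the closest match.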

What happens when Alien and Boy pass through our face recognition system?

For each test image of “Alien” and “Boy”, first compute the target encoding from the image path. Then find the encoding in the database with the smallest distance to the target encoding.

If the minimum distance (the L2 distance between the target encoding and the closest encoding in the database) is greater than 0.7, we assume the face is not in the database.
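The lookup described above can be sketched as follows. The function name `who_is_it` and the `(name, encoding)` list layout of the database are assumptions made for this example; only the 0.7 threshold and the L2 distance come from the text.

```python
import numpy as np

def who_is_it(target_encoding, database, threshold=0.7):
    """Find the closest database entry to target_encoding.

    database: list of (name, encoding) pairs (assumed layout)
    Returns (name, distance); name is None when the closest
    entry is farther away than the threshold.
    """
    target = np.asarray(target_encoding, dtype=np.float64)
    best_name, best_dist = None, float("inf")
    for name, encoding in database:
        # L2 distance between the target encoding and this stored encoding
        dist = float(np.linalg.norm(target - np.asarray(encoding, dtype=np.float64)))
        if dist < best_dist:
            best_name, best_dist = name, dist
    if best_dist > threshold:
        # Closest match is too far away: treat the face as not in the database
        return None, best_dist
    return best_name, best_dist
```

Returning the distance along with the name makes it easy to inspect borderline cases and to experiment with a different threshold.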

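When Alien tries to pass through our face recognition system:

| Input Test Image of Alien | Result | Closest image |
|---|---|---|
| (image) | Alien | (image) |
| (image) | Alien | (image) |
| (image) | Boy | (image) |
| (image) | Alien | (image) |
| (image) | Alien | (image) |
| (image) | Alien | (image) |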

Note that no image in the database resembles the green-eyed image; its distance is 0.5105655. So maybe we could lower the cutoff from 0.7 to 0.5.

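When Boy tries to pass through our face recognition system:

| Input Test Image of Boy | Result | Closest image |
|---|---|---|
| (image) | Alien | (image) |
| (image) | Boy | (image) |
| (image) | Boy | (image) |
| (image) | Alien | (image) |
| (image) | Alien | (image) |
| (image) | Boy | (image) |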

Results look pretty good for Alien (5 of 6 test images identified correctly) and mixed for Boy (3 of 6).

Summary

- We should retrain FaceNet on pictures of Alien and Boy to get better results.

- The input images were only 96×96, which may have thrown away a lot of information.

- The model was trained on human faces, whose embeddings differ from those of cats.

- I split the database images and the test images by the date on which the pictures were taken, assuming that pictures from the same date would be similar. On inspection, I found that the results are incorrect in cases where the test image is very different from the images in the database, that is, when nothing like it has been seen before. This can be fixed by adding more varied images to the database.

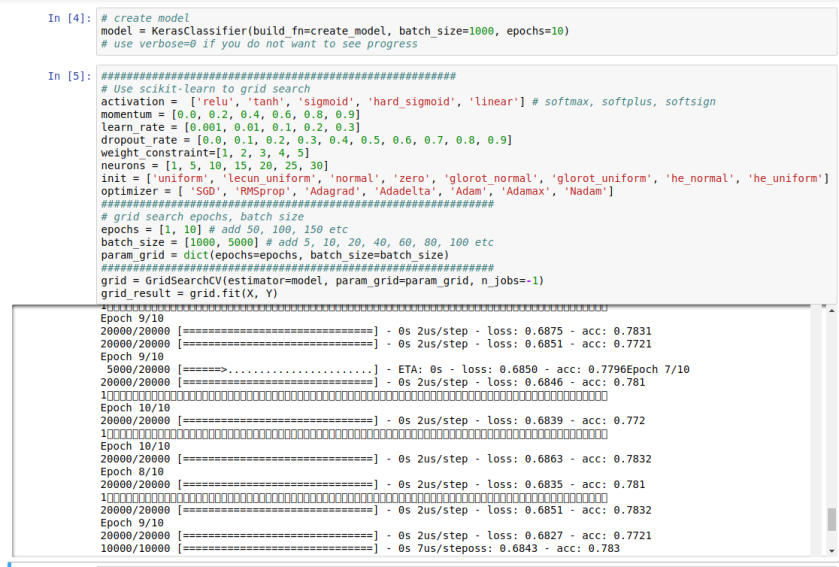

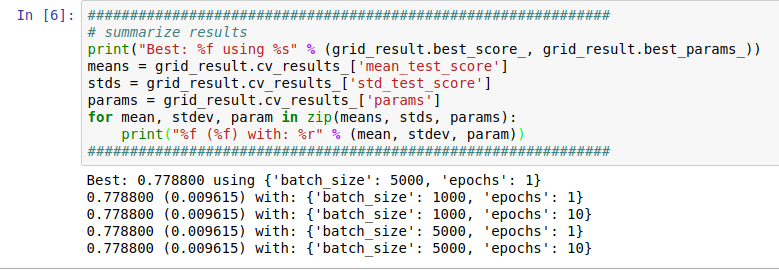

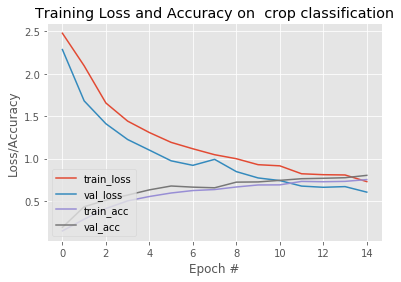

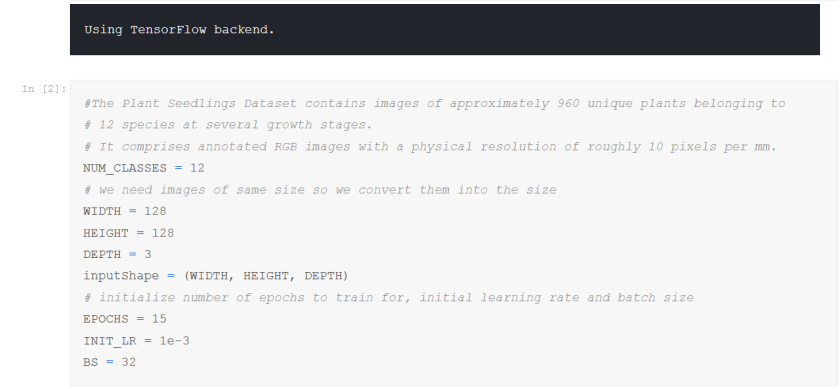

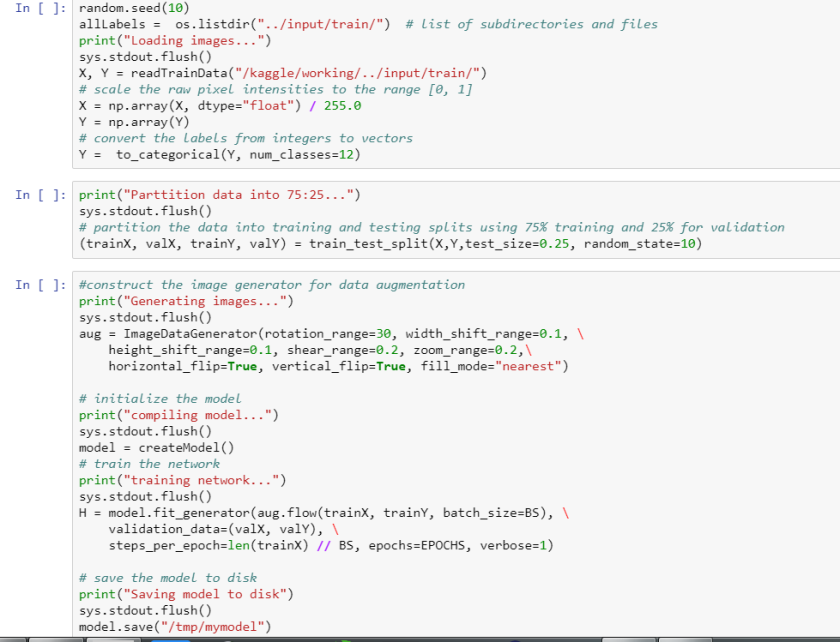

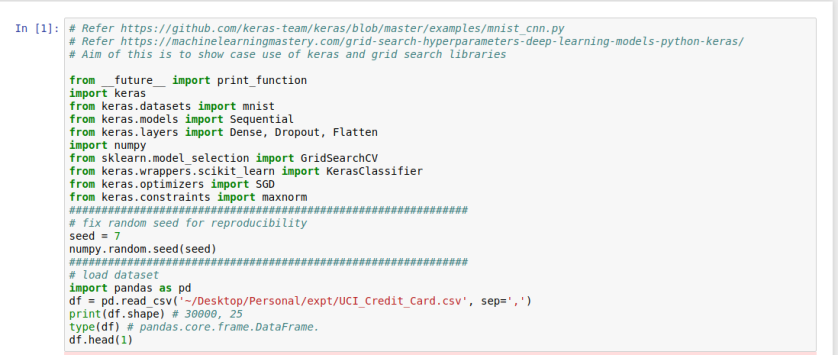

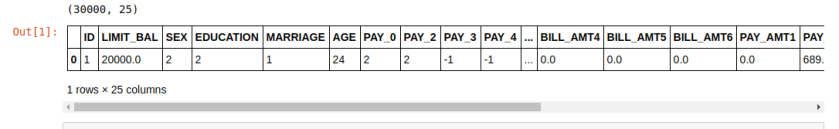

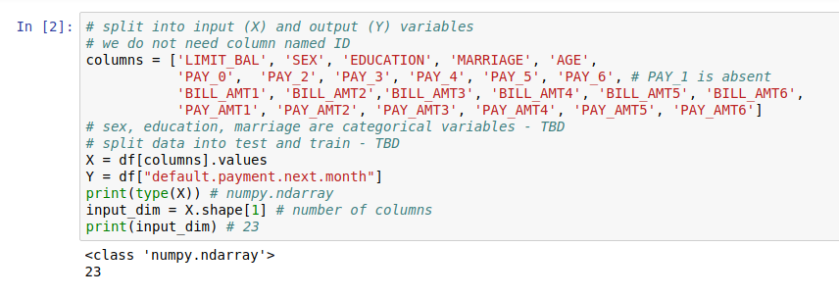

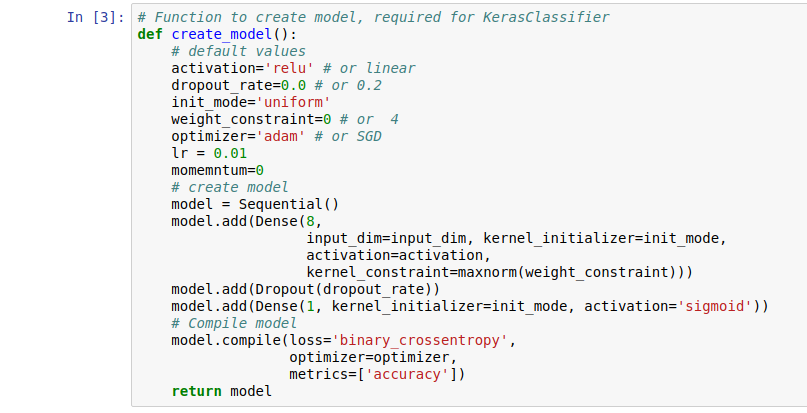

